Introduction

Part 1: Introduction to Probability

Chapter 1: What Do You Believe and How Do You Change It?

Chapter 2: Measuring Uncertainty

Chapter 3: The Logic of Uncertainty

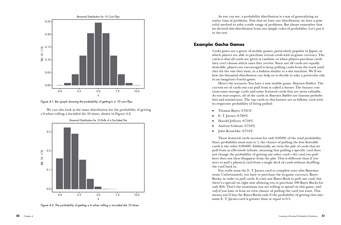

Chapter 4: Probability Distributions 1

Chapter 5: Probability Distributions 2

Part 2: Bayesian Probability and Prior Probabilities

Chapter 6: Conditional Probability

Chapter 7: Bayes' Theorem with LEGO

Chapter 8: Posterior, Likelihood, and Prior

Chapter 9: Working with Prior Probability Distributions

Part 3: Parameter Estimation

Chapter 10: Intro to Parameter Estimation

Chapter 11: Measuring the Spread of Data

Chapter 12: Normal Distribution and Confidence

Chapter 13: Tools of Parameter Estimation

Chapter 14: Parameter Estimation with Priors

Part 4: Hypothesis Testing: The Heart of Statistics

Chapter 15: From Parameter Estimation to Hypothesis Testing

Chapter 16: Comparing Hypotheses with Bayes Factor

Chapter 17: Bayesian Reasoning in the Twilight Zone

Chapter 18: When Data Doesn't Convince You

Chapter 19: From Hypothesis Testing to Parameter Estimation

Appendix A: A Crash Course in R

Appendix B: Enough Calculus to Get By